A Comparison of Solid State Drives to Serial ATA (SATA) Drive Arrays for Use with Oracle

One of the more interesting projects I participated in this year involved comparing a 64-gigabyte solid-state drive (SSD) from Texas Memory Systems to a serial ATA (SATA) array. The SATA array contained seven 7500 RPM, 200-gigabyte drives in a RAID5 configuration. I had read about the performance possibilities of the SSD and was eager to prove or disprove them for myself.

Logically, if you utilize a “memory” disk you are ridding yourself of the physical limitations of disk drive technology, basically trading in the spinning rust. In a disk drive, you are battling physics to squeeze more performance out of your array; there are certain physical properties that just can’t be altered on a whim (to quote Scotty from Star Trek, “Ya cannot change the laws of physics, Captain!”). When you use standard disk technology, you are limited by the disk rotational speed and the actuator arm latency. Combined, these latencies account for the difference between the “linear access rate” and the “actual” or “random access rate”; the difference is usually as much as 40-50 percent of the linear access rate. That is, if you can get 100 megabytes/second of linear access, you will get only 50-60 megabytes/second of random access. Since most Oracle applications access data non-linearly, the random access rate is the one we must plan around.

A memory-based array has no rotational or actuator arm latency, so linear and non-linear access rates are identical. Disk access times are generally in the range of 0.5 milliseconds to 10 milliseconds, and typical average latencies for mechanical disks are seven to nine milliseconds. Typical access times for memory are measured not in milliseconds but in nanoseconds (one billionth of a second versus one thousandth of a second), so we are immediately talking about a difference of one hundred thousand times or more (the units themselves differ by a factor of one million). However, due to the access speeds of the various interfaces and the processing overhead of the CPU and I/O processor, the real-world advantage usually drops to a fraction of that. For example, a disk-based sort is usually only 17-37 times slower than a memory-based sort.
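To make the latency arithmetic concrete, here is a minimal sketch. It assumes the standard rule that average rotational latency is the time for half a revolution; the 100-nanosecond memory access time and the function names are illustrative assumptions, not measurements from these tests.

```python
NS_PER_MS = 1_000_000  # nanoseconds in one millisecond


def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: the time for half a revolution, in ms."""
    ms_per_revolution = 60_000.0 / rpm
    return ms_per_revolution / 2.0


def latency_ratio(disk_ms: float, memory_ns: float) -> float:
    """How many times slower a disk access is than a memory access."""
    return (disk_ms * NS_PER_MS) / memory_ns


if __name__ == "__main__":
    for rpm in (7500, 10_000):
        latency = avg_rotational_latency_ms(rpm)
        print(f"{rpm} RPM: {latency:.1f} ms average rotational latency")

    # Unit-for-unit: one millisecond versus one nanosecond.
    print(f"1 ms vs 1 ns: {latency_ratio(1, 1):,.0f}x")
    # Assumed figures: a 10 ms disk access versus a 100 ns memory access.
    print(f"10 ms vs 100 ns: {latency_ratio(10, 100):,.0f}x")
```

Rotational latency is only one component of the total; seek time for the actuator arm is added on top, which is how average disk latencies end up in the high single-digit milliseconds.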

All of that being said, what did I find in my tests? The tests involved setting up a 20-gigabyte TPC-H database (the 20 gigabytes is data volume only and excludes indexes, support tablespaces, and logs) and then running the 22 basic TPC-H-generated queries. Of course, the time to load the database tables using SQL*Loader and the time required to build the indexes to support the queries were also measured.

The testing was started with a 2-disk SCSI array using 10K RPM Cheetah technology drives. But a third of the way through the second run of the queries, the drive array crashed hard and we had to use an available ATA array. It should be noted that the load times and index build times, as well as query times, were equivalent on the SCSI and ATA systems.

On average, the SSD-based system loaded tables around 30 percent faster than the ATA array. The index builds showed nearly the same result: 30 percent faster on the SSD array. While not as spectacular as I had hoped, a 30-percent time saving could be fairly substantial in most environments. With trepidation, I moved to the SQL SELECT testing.

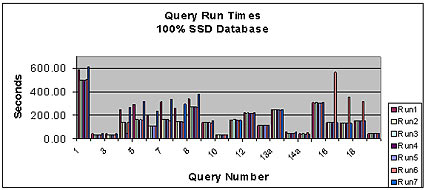

The first system to be tested was the SSD array. The TPC-H qgen program was used to create the basic query set; the queries were placed into a single file, and the SET TIMING ON command was added to capture the execution time of each SQL statement in the spool file used to log each run. I loaded up the script and executed it in the SQL*Plus environment. I have to admit I was a bit disappointed when the first query took 9 minutes to run. I finished up the seven different runs of the base queries using different configurations (which took a total of three days, part-time) and then cranked up the ATA array. The query results are shown in the following table:

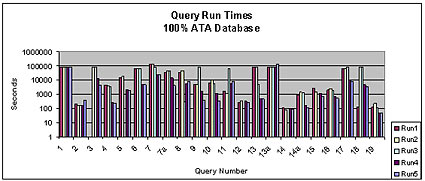
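For readers who want to reproduce the timing setup described above, the following is a minimal sketch of how such a script could be assembled. It is not the script used in these tests: the function name, the spool file name, and the placeholder queries are hypothetical, and only the standard SQL*Plus commands SPOOL, SET TIMING, PROMPT, and EXIT are assumed.

```python
from typing import Iterable


def build_timing_script(queries: Iterable[str], spool_file: str) -> str:
    """Wrap SQL statements in a SQL*Plus script that logs elapsed times."""
    lines = [
        f"SPOOL {spool_file}",  # log all output, including timings
        "SET TIMING ON",        # print elapsed time after each statement
    ]
    for number, sql in enumerate(queries, start=1):
        statement = sql.strip().rstrip(";")
        lines.append(f"PROMPT Query {number}")  # label each result in the log
        lines.append(statement + ";")           # exactly one terminator
    lines += ["SET TIMING OFF", "SPOOL OFF", "EXIT"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder statements; the real queries would come from qgen output.
    demo_queries = ["SELECT COUNT(*) FROM lineitem;", "SELECT COUNT(*) FROM orders"]
    print(build_timing_script(demo_queries, "ssd_run1.log"))
```

The generated text would be saved to a file and run from SQL*Plus with the `@` command. Multi-line queries containing blank lines may need additional SQL*Plus settings, which this sketch does not handle.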

After the first SQL run on the ATA array, I felt much better. After 30 hours, I had to stop the first SQL query. Not the first run, just the first query! In fact, running the entire series of 22 SQL statements on the ATA array took several days for each run. That is correct: one run of the test SQL on the ATA array took longer than all seven runs on the SSD array. The results for the ATA runs are shown in the next graph. Notice that the scale on the vertical axis of the graph is logarithmic. This means that each factor of 10 occupies the same height; thus 1-10 takes up the same vertical distance as 10-100, 100-1000, and so on. The logarithmic scale was required to keep the poorest of the results from completely masking the reasonable results.

Note that any query that took longer than 30 hours (on the average) was stopped and the test run resumed with the next query. I have no idea whether some of them would ever have come back with results on the ATA array.
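The effect of the logarithmic vertical axis mentioned above can be checked with a few lines of arithmetic. This sketch assumes a base-10 axis and uses the two figures quoted in the text, a 9-minute query and the 30-hour cutoff, converted to seconds; the function name is illustrative.

```python
import math


def log_axis_position(value: float) -> float:
    """Vertical position of a value on a base-10 logarithmic axis."""
    return math.log10(value)


# Each decade (1-10, 10-100, 100-1000) spans the same vertical distance.
decade_edges = [1, 10, 100, 1000]
decade_heights = [
    log_axis_position(upper) - log_axis_position(lower)
    for lower, upper in zip(decade_edges, decade_edges[1:])
]

# A 9-minute query versus the 30-hour cutoff, both in seconds.
nine_minutes = 9 * 60
thirty_hours = 30 * 3600
linear_ratio = thirty_hours / nine_minutes
log_gap = log_axis_position(thirty_hours) - log_axis_position(nine_minutes)

if __name__ == "__main__":
    print("Height of each decade:", decade_heights)
    print(f"Linear ratio: {linear_ratio:.0f}x, gap on log axis: {log_gap:.2f} decades")
```

On a linear axis the 30-hour bar would be 200 times taller than the 9-minute bar, which would flatten the shorter bar to near invisibility; on the logarithmic axis the two are separated by a little over two decades.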

Overall, based on elapsed time, the SSD array performed the queries 276 times faster than the ATA array. Note that this figure reflects the 30-hour limit on the queries. Had I waited for those long-running queries to complete, the difference might have been much greater.
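To see why the 30-hour cutoff makes the reported ratio a floor rather than an exact figure, consider this sketch. It assumes the overall figure is a ratio of total elapsed times, which the text does not spell out, and the per-query timings below are invented for illustration; they are not the measured results.

```python
CAP_SECONDS = 30 * 3600  # the 30-hour cutoff, in seconds


def overall_speedup(fast_times, slow_times, cap=CAP_SECONDS):
    """Ratio of total elapsed times, with each slow time truncated at the cap."""
    capped_slow = [min(elapsed, cap) for elapsed in slow_times]
    return sum(capped_slow) / sum(fast_times)


# Hypothetical elapsed times in seconds for three queries (not real data).
ssd_seconds = [540, 120, 300]
ata_seconds = [200_000, 36_000, 90_000]  # the first would have been cut off

capped = overall_speedup(ssd_seconds, ata_seconds)
uncapped = overall_speedup(ssd_seconds, ata_seconds, cap=float("inf"))

if __name__ == "__main__":
    print(f"Speedup with the 30-hour cap: {capped:.2f}x")
    print(f"Speedup if every query had finished: {uncapped:.2f}x")
```

Because truncating a long-running query can only shrink the slow system's total, the capped ratio never exceeds the ratio that would have been measured had every query been allowed to finish.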

The SSD drives were able to complete the entire run of example TPC-H queries with no errors (after some initial tweaking of temporary tablespace size). On the ATA array, with identical temporary tablespace sizes and all other database and OS settings the same, the scripts had to be modified to release temporary segments by logging the database user out and back in. If the user wasn’t logged off and logged back in during the run, numerous errors occurred, which usually resulted in ORA-1336 errors and forced disconnection of the user.

In summary, SSD technology is not a silver bullet for database performance problems. If you are CPU-bound, the SSD drives may even make performance worse. You need to carefully evaluate your system to determine its wait profile, and consider SSD technology only if the majority of your wait activity is I/O related. However, I suspect that as memory becomes cheaper and cheaper (a gigabyte costs about $1000 at the time of this writing), disks will be used only to provide a place to store the contents of SSD arrays upon power failures. Indeed, with the promise of quantum technology memory right around the proverbial corner (memory that uses the quantum state of the electron to store information, promising a factor of 20 increase in memory storage capacity), disks may soon go the way of punch cards, paper tape, and teletype terminals.

--

Mike Ault is one of the leading names in Oracle technology. The author of more than 20 Oracle books and hundreds of articles in national publications, Mike Ault has five Oracle Masters Certificates and was the first popular Oracle author with his book Oracle7 Administration and Management. Mike also wrote several of the “Exam Cram” books, and enjoys a reputation as a leading author and Oracle consultant.

Mike has released his complete collection of Oracle scripts, covering every possible area of Oracle administration and management. The collection is available at: http://www.rampant-books.com/download_adv_mon_tuning.htm.

Contributors : Mike Ault

Last modified 2005-06-21 11:51 PM