Oracle 2020: A Glimpse Into the Future of Database Management - Part 2


Inside Oracle 2020

The year is 2020 and we are taking a historical look at how Oracle database management has advanced over the past 15 years. As we noted in our first installment on Oracle 2020, hardware advances preceded the changes in 21st-century database technology. Only after vendors introduced new hardware did Oracle databases change to leverage it.

One of the greatest hardware-induced technology changes was the second age of mainframe computing which began in 2005 and continues today. Let’s take a look at the second age of mainframes and see how this architecture has changed our lives.

The Second Age of Mainframes

At the dawn of the 21st century, a push toward Grid computing began with Oracle10g, as well as a new trend called server consolidation. In both Grid computing and server consolidation, CPU and RAM resources were delivered on-demand as required by your application. In other words, the computing world went straight back to 1965 and re-entered the land of the large, single computer!

The new mainframe-like servers were fully redundant, providing complete hardware reliability for all server components including RAM, CPU and busses. It was clear that server consolidation had many benefits, and many companies dismantled their ancient distributed UNIX server farms and consolidated onto a single large server, with huge savings in both management and hardware costs. Several hardware trends of the period reinforced the move:

- CPU speed continued to outpace memory speed. RAM speed had not improved since the 1970s. This meant that RAM sub-systems had to be localized to keep the CPUs running at full capacity.

- Platter disks were being replaced by solid-state RAM disk.

- Oracle databases were shifting from being I/O-bound to CPU-bound as a result of improved data caching.

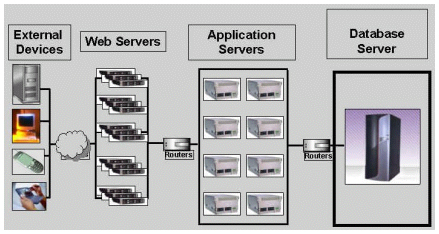

From a historical perspective, we must remember that the initial departure from the “glass house” mainframe was not motivated by any compelling technology. Rather, it was a pure matter of economics. The new mini-computers of the 1990s were far cheaper than mainframes and provided computing power with a lower hardware cost, but a higher human cost. This required more expensive system administrators and DBAs to manage the multiple servers. This low TCO (total cost of ownership) led IT management to begin dismantling their mainframes, replacing them with hundreds of small UNIX-based minicomputers (refer to figure 1).

Figure 1: The multi-server architecture of the late 20th century.

In shops with multiple Oracle instances, consolidating onto a single large Windows server saved thousands of dollars in resource costs and provided better resource allocation. In many cases, the payback period for server consolidation was very fast, especially when the existing system had reached the limitations of the 32-bit architecture.

The proliferation of server farms had caused a huge surge in demand for Oracle DBA professionals. Multiple database servers may have represented job security for the DBA and system administration staff that maintained the servers, but they presented a serious and expensive challenge to IT management because they were far less effective than a monolithic mainframe solution:

- High expense — In large enterprise data centers, hardware resources were deliberately over-allocated in order to accommodate processing-load peaks.

- High waste — Because each Oracle instance resided on a single server, there was significant duplication of administration and maintenance, and a suboptimal utilization of RAM and CPU resources.

- Labor-intensive — In many large Oracle shops, a shuffle occurred when a database outgrew its server. A new server was purchased, and the database was moved to the new server. Another database was migrated onto the old server. This shuffling of databases between servers was a huge headache for the Oracle DBAs who were kept busy, after hours, moving databases to new server platforms.
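The over-allocation and waste points above can be made concrete with a little arithmetic. The sketch below uses invented CPU loads for three hypothetical instances (the names and numbers are illustrative only, not measurements from any real system). It compares the capacity needed when each instance has its own server sized for its own peak with the capacity needed when one shared server is sized for the combined peak.

```python
# Hypothetical CPU demand (in cores) per time period for three Oracle instances.
# All names and numbers are invented for illustration.
hourly_load = {
    "orders":    [2, 2, 8, 3],   # peaks in the third period
    "reporting": [1, 7, 1, 1],   # peaks in the second period
    "payroll":   [6, 1, 1, 2],   # peaks in the first period
}


def separate_capacity(loads):
    """Capacity needed when every instance has its own server sized for its own peak."""
    return sum(max(series) for series in loads.values())


def consolidated_capacity(loads):
    """Capacity needed when one server is sized for the peak of the combined load."""
    combined = [sum(period) for period in zip(*loads.values())]
    return max(combined)


separate = separate_capacity(hourly_load)          # 8 + 7 + 6
consolidated = consolidated_capacity(hourly_load)  # max(9, 10, 10, 6)
print(f"separate servers: {separate} cores")
print(f"consolidated server: {consolidated} cores")
```

Because the three peaks in this made-up data fall in different periods, the shared server needs far less headroom than the sum of the individual peaks. If every instance peaked at the same moment, the two figures would be equal and consolidation would save nothing on capacity.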

When the new 16-, 32-, and 64-CPU servers were introduced in the early 21st century, it became clear to IT management that the savings in manpower would easily outweigh the costs of the monolithic hardware.

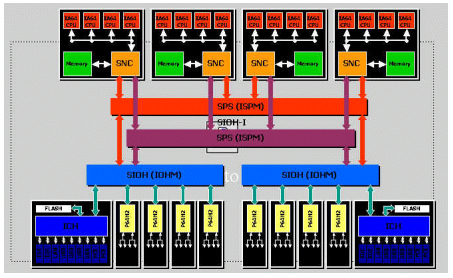

Oracle professionals realized that within a consolidated server you could easily add CPU and RAM resources as your processing demands increased. This offered a fast, easy, and seamless growth path for the new mainframe computers of the early 21st century (refer to figure 2).

Figure 2: The Intel-CPU mainframe architecture of the early 21st century (Courtesy UNISYS).

Server consolidation technology not only greatly reduced the number of servers; it also reduced the number of IT staff required to maintain the server software.

A single server meant a single copy of the Oracle software. Plus, the operating system controlled resource allocation and the server automatically balanced the demands of many Oracle instances for processing cycles and RAM resources. Of course, the Oracle DBA still maintained control of the RAM and CPU within the server, and they could dedicate Oracle instances to a fixed set of CPUs (using processor affinity) or adjust the CPU dispatching priority (the UNIX “nice” command) of important Oracle tasks.
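As a rough illustration of the two controls mentioned above, the following Python sketch pins the calling process to a fixed set of CPUs and lowers its dispatching priority. It is only a sketch under stated assumptions: it acts on the Python process itself rather than on an Oracle instance, the helper names are hypothetical, `os.sched_setaffinity` is available on Linux but not on every platform, and `os.nice` is available on Unix-like systems only.

```python
import os


def pick_cpus(available, count):
    """Return the `count` lowest-numbered CPUs from `available` as a set."""
    if count < 1 or count > len(available):
        raise ValueError("count must be between 1 and the number of available CPUs")
    return set(sorted(available)[:count])


def pin_current_process(cpus):
    """Restrict the calling process to `cpus`.

    Returns the resulting affinity set, or None where the platform
    does not expose os.sched_setaffinity.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    os.sched_setaffinity(0, cpus)  # pid 0 means the calling process
    return os.sched_getaffinity(0)


def lower_priority(increment):
    """Raise the niceness of the calling process by `increment`.

    A higher niceness means a lower dispatching priority. Returns the
    new niceness, or None where os.nice is unavailable.
    """
    if not hasattr(os, "nice"):
        return None
    return os.nice(increment)


# Choosing a subset is pure bookkeeping and works on any platform.
print(pick_cpus({3, 0, 2, 1}, 2))
```

On a real server, an administrator would more likely apply the same ideas from the shell to the database's operating-system processes; the functions here only show the shape of the two operations.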

If any CPU failed, the monolithic server would re-assign the processing without interruption. This offered a more affordable and simpler solution than Real Application Clusters or Oracle Grid computing.

By consolidating server resources, the DBA had fewer servers to manage and no longer needed to be concerned about outgrowing a server. But the server consolidation movement also meant that fewer Oracle DBAs were needed because there was no longer a need to repeat DBA tasks, over and over, on multiple servers. Let’s take a closer look at how the job of the Oracle DBA has changed.

Changing Role of the Oracle DBA in 2020

In the late 20th century, shops had dozens of Oracle DBA staff and important tasks were still overlooked because DBAs said “It’s not my job,” or “I don’t have time.” Changing technology mandated that the 21st century DBA would have more overall responsibility for the whole operation of their Oracle database.


So, what did this mean to the Oracle DBA of the early 21st century? Clearly, less time was spent installing and maintaining multiple copies of Oracle, and this freed up time for the DBA to pursue more advanced tasks such as SQL tuning and database performance optimization.

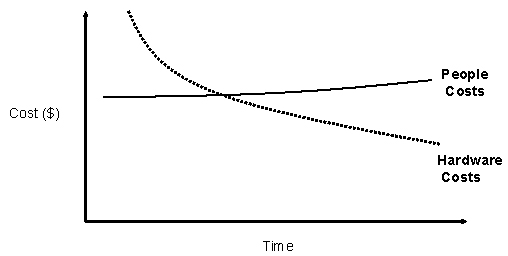

But the sad reality of server consolidation was that thousands of mediocre Oracle DBAs lost their jobs to this trend. The best DBAs continued to find work, but DBAs who were used for the repetitive tasks of installing upgrades on hundreds of small servers were displaced (refer to figure 3).

Figure 3: The changing dynamics of human and hardware costs.

The surviving Oracle DBAs found that they were relieved of the tedium of applying patches to multiple servers, constantly re-allocating server resources with Oracle Grid Control, and constantly monitoring and tuning multiple systems. The DBA job role became far more demanding, and many companies started to view the DBA as a technical management position, encompassing far more responsibility than the traditional DBA.

Consequently, many computer professionals and Oracle DBAs were faced with a new requirement to have degrees in both computer science and business administration. The business administration degree allowed them to understand the workings of internal business systems and helped them to design the corporate database.

By 2015, the automation of many of the Oracle DBA functions led to the Oracle professional accepting responsibility for a whole new set of duties:

- Data modeling and Oracle database design

- Data interface protocols

- Managing data security

- Managing development projects

- Predicting future Oracle trends for hardware usage and user load

Now that we understand how the DBA job duties expanded in scope, let’s take a look at the evolution of the Oracle database over the past 15 years.

Inside Oracle 2020

The world of Oracle management is totally different today than it was back in 2004. We no longer have to worry about applying patches to Oracle software, all tuning is fully automated, and hundreds of Oracle instances all reside within a single company-wide server.

Looking remarkably like the access architectures of the 1970s, all Oracle access is done via disk-less Internet Appliances (IAs), very much like the 3270 dumb terminals from the mainframe days of the late 20th century. The worldwide high-speed network allows all software to be accessed from a single, master location, and everything, including word processing, spreadsheets and databases, is accessed in virtual space.

Software vendors save millions of dollars and they can instantly transmit patches and upgrades without service interruption. Best of all for the vendor, software piracy is completely eliminated.

The SQL-09 standard also simplified data access for relational databases. By removing the FROM and GROUP BY clauses for good, the SQL-09 standard made it easy to add artificial-intelligence language pre-processors to natural-language interfaces for Oracle data.

To see the difference, here is a typical SQL statement from the 20th century:

    SELECT
        customer_name,
        SUM(purchases)
    FROM
        customer
    NATURAL JOIN
        ordor
    WHERE
        customer_location = 'North Carolina'
    GROUP BY
        customer_name
    ORDER BY
        customer_name;

SQL-09 removes the need for the FROM and GROUP BY clauses, pulling the table names from the data dictionary:

    SELECT
        customer_name,
        SUM(purchases)
    WHERE
        customer_location = 'North Carolina'
    ORDER BY
        customer_name;
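To make the idea of pulling table names from the data dictionary more tangible, here is a toy Python sketch of such a pre-processor. Everything in it is an assumption made for illustration: the dictionary contents, the function name `expand_query`, and the simplification that every column name belongs to exactly one table. It infers the FROM tables from the columns a query mentions and infers the GROUP BY list from the selected columns that are not aggregated.

```python
# Toy data dictionary: column name -> owning table. Hypothetical contents.
DATA_DICTIONARY = {
    "customer_name": "customer",
    "customer_location": "customer",
    "purchases": "ordor",
}


def expand_query(select_items, where_columns, dictionary):
    """Infer FROM tables and GROUP BY columns for a query that omits them.

    select_items: list of (column, aggregate) pairs; aggregate is None or
                  a function name such as "sum".
    where_columns: columns referenced in the WHERE clause.
    """
    referenced = [col for col, _ in select_items] + list(where_columns)
    tables = []
    for col in referenced:
        table = dictionary[col]  # a KeyError here means an unknown column
        if table not in tables:
            tables.append(table)
    # Selected columns that are not aggregated must be grouped on,
    # but only when the query contains at least one aggregate.
    has_aggregate = any(agg for _, agg in select_items)
    group_by = [col for col, agg in select_items if agg is None] if has_aggregate else []
    return tables, group_by


tables, group_by = expand_query(
    select_items=[("customer_name", None), ("purchases", "sum")],
    where_columns=["customer_location"],
    dictionary=DATA_DICTIONARY,
)
print("FROM", " natural join ".join(tables))
print("GROUP BY", ", ".join(group_by))
```

In a real schema the same column name often appears in several tables, so a pre-processor of this kind would also need a rule for resolving ambiguity; the sketch sidesteps that by assuming unique column names.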

Oracle’s cost-based SQL optimizer is now 100% effective and dynamic sampling ensures that the execution plan is optimal for every query.

We also see that all Oracle software is dynamically accessed over the Web and a single copy of Oracle executables is accessed from the main Oracle software server in Redwood Shores. Several hundred master copies of Oracle exist on the worldwide server and applying patches is a simple matter of re-directing your Oracle instance to pull the executables from another master copy of Oracle.

Oracle first started on-demand computing way back in 2004 when the Oracle10g Enterprise Manager would go to MetaLink and gather patch information for the DBA. This has been expanded to make all Oracle software instantly available to any Internet-enabled appliance.

Oracle’s Inter-Instance Database

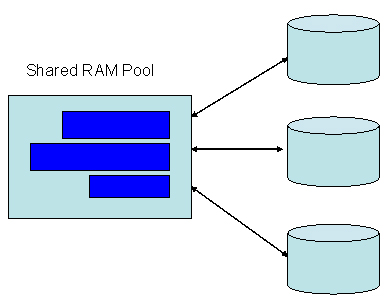

Those of you old enough to remember back to 2008 will recall that Oracle abandoned Grid computing in favor of inter-instance sharing of RAM resources. As corporations migrated onto the large monolithic servers, companies began to move hundreds of Oracle instances into a single box. These servers were excellent at sharing CPU resources between instances, but RAM could not be freed by one instance to be used by another (refer to figure 4).

Figure 4: Oracle Inter-instance RAM architecture.

Oracle borrowed from its Real Application Clusters (RAC) and Automatic Memory Management (AMM) technologies to create a new way for hundreds of Oracle instances to share RAM resources. With RAM costs falling below $1,000 per terabyte, multi-gigabyte data caches became commonplace.

All Oracle instances became self-managing during this time, and AMM ensured that every instance had on-demand RAM resources.
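The idea of one instance freeing RAM for another can be pictured with a small model. The Python sketch below is a toy, not a description of any Oracle mechanism: the class `SharedRamPool`, its methods, and the instance names are all hypothetical. It shows a fixed pool from which instances request memory, receive a partial grant when the pool runs short, and hand memory back for others to use.

```python
class SharedRamPool:
    """Toy model of a fixed RAM pool that several instances borrow from."""

    def __init__(self, total_gb):
        self.total_gb = total_gb
        self.allocated = {}  # instance name -> GB currently held

    @property
    def free_gb(self):
        """RAM not currently held by any instance."""
        return self.total_gb - sum(self.allocated.values())

    def request(self, instance, gb):
        """Grant up to `gb` to `instance`; return the amount actually granted."""
        if gb < 0:
            raise ValueError("gb must be non-negative")
        granted = min(gb, self.free_gb)
        self.allocated[instance] = self.allocated.get(instance, 0) + granted
        return granted

    def release(self, instance, gb):
        """Return up to `gb` from `instance` to the pool; return the amount released."""
        if gb < 0:
            raise ValueError("gb must be non-negative")
        held = self.allocated.get(instance, 0)
        released = min(gb, held)
        self.allocated[instance] = held - released
        return released


pool = SharedRamPool(total_gb=64)
pool.request("sales", 40)
short = pool.request("hr", 40)      # only 24 GB is free, so the grant is partial
pool.release("sales", 30)           # sales frees memory it no longer needs
topped_up = pool.request("hr", 16)  # hr can now obtain the rest of its request
print(short, topped_up, pool.free_gb)
```

The design choice worth noting is that a short pool produces a partial grant rather than an error, which matches the on-demand flavor of the description above; a stricter model could refuse the request or queue it instead.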

Solid-State Oracle Database

The first new generation of disk-less Oracle databases was introduced back in 2011 when Oracle 16ss debuted. Appropriate for all but the largest data warehouses, this disk-less architecture heralded a whole new way of managing Oracle data.

With the solid-state architecture, the old-fashioned RAM region called the SGA disappeared forever and was replaced with a new scheme that managed serialization, locks and latches directly within the RAM data blocks. The idea of “caching” was gone forever, and everything was available with nanosecond access speeds. With this greatly simplified architecture, Oracle was able to reduce the number of background processes required to manage Oracle and exploit the new solid-state disks. Oracle also improved the serialization mechanisms to make it easier to manage high volumes of simultaneous access. Oracle 16ss was the first commercial database to break the million transactions per second threshold.

Oracle3d Adds a New Dimension

With all Oracle databases running in solid-state memory, the introduction of the new 32-state Gallium Arsenide chips with picosecond access speeds shifted the database bottleneck to the network. By now, all traditional wiring has been replaced by fiber optics and all system software is delivered over the web.

In 2016, Oracle 17-3d was introduced to add the temporal dimension to database management. Adding the third dimension of time, Oracle was able to exploit the ancient concept of their Flashback product to allow any Oracle database to be viewed as a dynamic object with the changes to the database available in real-time.
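Viewing data along a time axis comes down to keeping old versions and answering "what was the value as of time T?". The following Python sketch is a minimal illustration of that lookup, assuming integer timestamps and a hypothetical `TemporalStore` class. It is not how Flashback is implemented; it only shows the as-of idea with a binary search over a version history.

```python
import bisect


class TemporalStore:
    """Toy key-value store that keeps every version of a value with its timestamp."""

    def __init__(self):
        # key -> (list of timestamps, list of values), both in time order
        self._history = {}

    def put(self, key, value, timestamp):
        """Record a new version of `key`; timestamps must not go backwards."""
        times, values = self._history.setdefault(key, ([], []))
        if times and timestamp < times[-1]:
            raise ValueError("timestamps must be non-decreasing per key")
        times.append(timestamp)
        values.append(value)

    def as_of(self, key, timestamp):
        """Return the value of `key` as it stood at `timestamp`, or None if not yet set."""
        times, values = self._history.get(key, ([], []))
        # Number of versions written at or before the requested time.
        index = bisect.bisect_right(times, timestamp)
        return values[index - 1] if index else None


store = TemporalStore()
store.put("credit_limit", 1000, timestamp=10)
store.put("credit_limit", 2500, timestamp=20)
print(store.as_of("credit_limit", 15))
```

Asking for a time before the first write returns None, and asking for any time after the last write returns the latest version, so the current state is just the as-of view at "now".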

Where from here?

We have seen Oracle technology come a long way in the first twenty years of the 21st century, all as a direct response to advances in hardware. As computing hardware continues to advance, Oracle will respond and incorporate the new hardware technology into its data engine.

While it’s always impossible to accurately predict the future, we can always take clues from the advances in hardware, knowing that Oracle databases will step up to utilize the new hardware advances within their database products and tools.

--

Donald K. Burleson is one of the world’s top Oracle Database experts with more than 20 years of full-time DBA experience. He specializes in creating database architectures for very large online databases and he has worked with some of the world’s most powerful and complex systems. A former Adjunct Professor, Don Burleson has written 15 books, published more than 100 articles in national magazines, serves as Editor-in-Chief of Oracle Internals and edits for Rampant TechPress. Don is a popular lecturer and teacher and is a frequent speaker at Oracle Openworld and other international database conferences. Don’s Web sites include DBA-Oracle, Remote-DBA, Oracle-training, remote support and remote DBA.

Contributors : Donald K. Burleson

Last modified 2006-01-05 10:03 AM